Language Learning

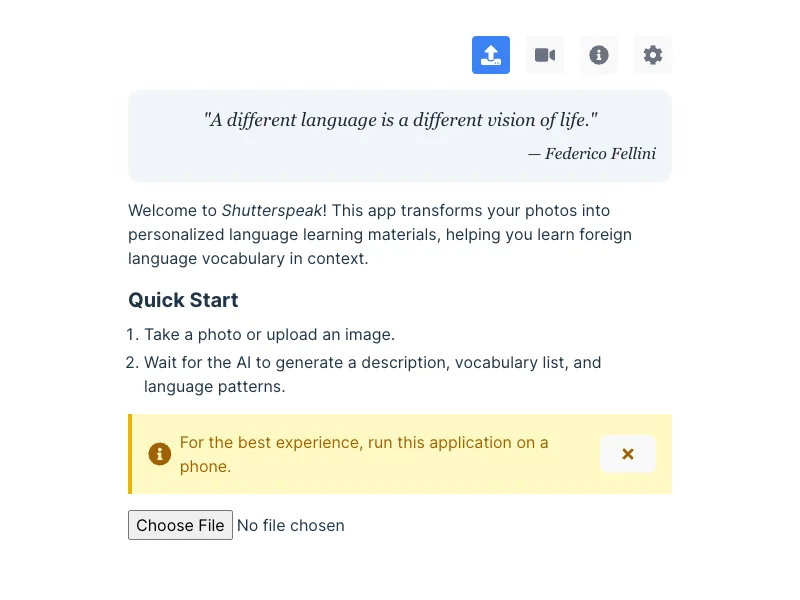

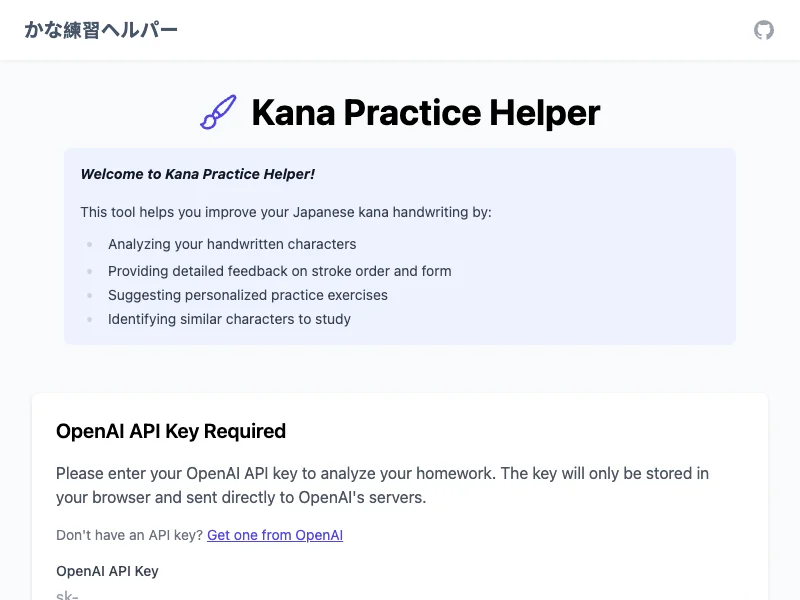

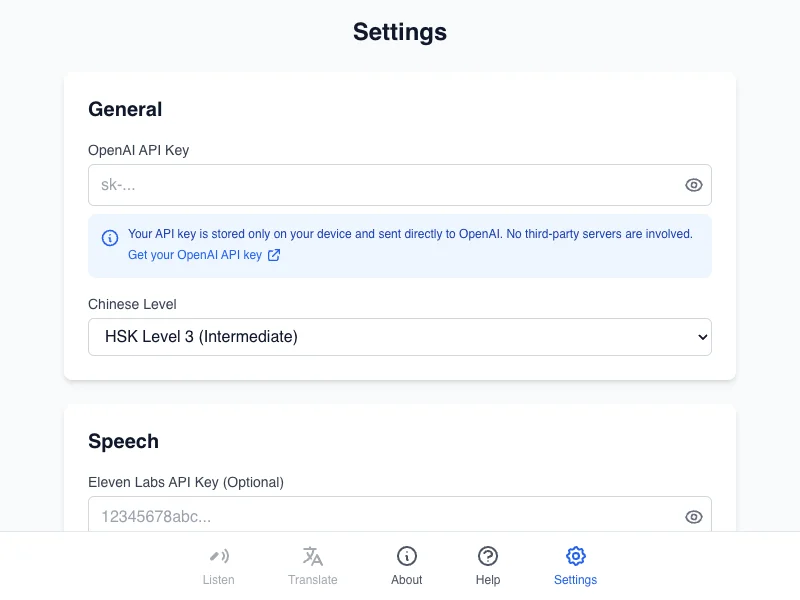

Tools and applications to assist in learning foreign languages

Web Applications

Mandarin Sentence Practice

Web application for practicing reading and listening to Mandarin Chinese sentences.

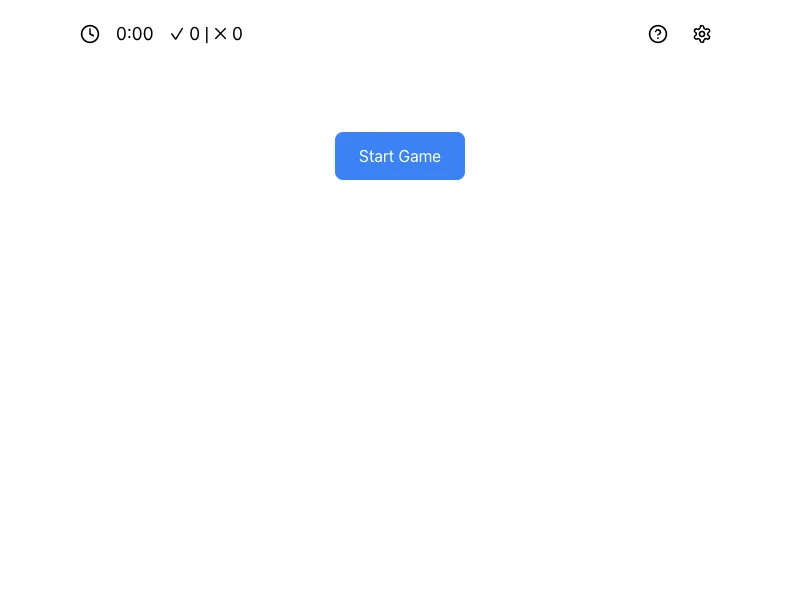

ⓘKana Game

An interactive game for learning Japanese kana characters.

Command Line Tools

Labelingo

Annotate UI screenshots with translations for language learning.

labelingo screenshot.png# Basic usage

labelingo screenshot.png

# Translate to a specific language (e.g., French)

labelingo screenshot.png -l fr

# Process multiple files

labelingo *.png -o translated/

# Install with additional OCR support

pip install 'labelingo[ocr]'

Add2Anki

A CLI tool to add language learning cards to Anki, with automatic translation and audio generation.

ⓘadd2anki 'Hello, how are you?'# Basic usage (uses Google Translate TTS by default)

add2anki 'Hello, how are you?'

# Start interactive mode

add2anki

# Specify a deck

add2anki --deck 'Chinese Vocabulary' 'u4f60u597d'

# Use ElevenLabs for high-quality audio

add2anki --tts-provider elevenlabs 'Bonjour, comment allez-vous?'

Audio2Anki

Convert audio and video files into Anki flashcard decks with translations.

audio2anki input.mp3# Process an audio file with default settings

audio2anki input.mp3

# Specify source and target languages

audio2anki input.mp3 --source-language japanese --target-language english

# Process with voice isolation (uses ElevenLabs API)

audio2anki --voice-isolation input.m4a

# Configure settings

audio2anki config set use_cache true

Subburn

Create videos with burnt-in subtitles from audio or video files.

subburn audio.mp3 subtitles.srt# Create a video with subtitles from an audio file and subtitle file

subburn audio.mp3 subtitles.srt

# Automatic transcription using OpenAI Whisper

subburn audio.mp3

# Create a video with a still image background

subburn input.mp3 subtitles.srt background.jpg

# Add subtitles to an existing video

subburn video.mp4 subtitles.srt

# Specify custom output path

subburn input.mp3 subtitles.srt -o output.mov

Other Projects

Speech Provider

Python package for accessing text-to-speech APIs in a uniform way.

from speech_provider import get_voice_provider# Import the package

from speech_provider import get_voice_provider

# Basic usage

provider = get_voice_provider()

# Get available voices for a specific language

voices = provider.get_voices(lang='en-US')

if voices:

# Create and play an utterance with the first available voice

utterance = voices[0].create_utterance('Hello, welcome to my application!')

# Start speaking

utterance.play()

# Use with Eleven Labs for higher quality voices

elevenlabs_provider = get_voice_provider(

elevenlab_api_key='your-api-key',

cache_max_age=3600 # Cache for 1 hour

)

# Get default voice for a language

default_voice = elevenlabs_provider.get_default_voice(lang='zh-CN')

if default_voice:

utterance = default_voice.create_utterance('你好,欢迎使用我的应用程序!')

utterance.play()

# Get voices for specific language

voices = provider.get_voices(lang='fr-FR')

for voice in voices:

print(f"Voice: {voice.name}, Language: {voice.lang}")

Contextual Language Detection

A context-aware language detection library that improves accuracy by considering document-level language patterns.

ⓘfrom contextual_langdetect import contextual_detect# Basic usage with context-awareness

from contextual_langdetect import contextual_detect

# Example multilingual text

sentences = [

"你好。", # Chinese

"你好吗?", # Chinese

"很好。", # Could be detected as Japanese without context

"我家也有四个,刚好。", # Chinese

"那么现在天气很冷,你要开暖气吗?", # Chinese (Wu dialect)

"Okay, fine I'll see you next week.", # English

"Great, I'll see you then." # English

]

# Context-aware detection (default)

languages = contextual_detect(sentences)

print(languages) # ['zh', 'zh', 'zh', 'zh', 'zh', 'en', 'en']

# Context-unaware detection

languages = contextual_detect(sentences, context_correction=False)

print(languages) # ['zh', 'zh', 'ja', 'zh', 'wuu', 'en', 'en']

# Get language distribution in a document

from contextual_langdetect.detection import count_by_language

mixed_sentences = [

"Hello world.", # English

"Bonjour le monde.", # French

"Hallo Welt.", # German

"Hello again." # English

]

counts = count_by_language(mixed_sentences)

print(counts) # {'en': 2, 'fr': 1, 'de': 1}

# Get the majority language in a document

from contextual_langdetect.detection import get_majority_language

majority = get_majority_language(mixed_sentences)

print(majority) # 'en'

About These Projects

These tools were created to support my own language learning journey with Mandarin Chinese.

All projects are open source and available on GitHub . Feel free to contribute or adapt them for your own use.